FAQ Question Answering for Government Affairs

1. Project Overview

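Government staff spend a great deal of time and effort on tasks such as explaining policies. Over time they accumulate many question-answer pairs, but they often do not know how to turn them into a question answering system that would make their daily work faster and simpler.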

This project is based on the PaddleNLP FAQ System.

All of the project's source code is open-sourced in PaddleNLP.

If you find it helpful, please star the repository so you can find it again easily: https://github.com/PaddlePaddle/PaddleNLP

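Join the WeChat discussion group

If you have any questions, join the PaddleNLP technical discussion group on WeChat to talk about NLP with others. Add the assistant's WeChat account and reply "NLP".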

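1.1 System Features

Low barrier to entry

Step-by-step guide to building a retrieval-based FAQ System

Build an FAQ System without labeled similar Query-Query Pair data

Strong results

Industry-leading retrieval pre-trained model: RocketQA DualEncoder

A leading solution for unlabeled data: a retrieval pre-trained model combined with enhanced unsupervised semantic-index fine-tuning

High performance

Fast vector extraction with Paddle Inference

Fast querying and high-performance index building with Milvus

High-performance deployment with Paddle Serving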

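Application Areas

FAQ question answering systems can be used in the following areas:

1. Smart cities: COVID-19 inquiries and Q&A for one-stop government service halls.

2. Insurance: Q&A about claims, auto insurance, personal insurance, and other insurance products.

3. Telecommunications: Q&A on everyday customer inquiries about mobile phones and other services.

4. Legal: Q&A on civil litigation and insurance claims.

5. Finance: Q&A on credit cards and other banking services.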

2. Installation

The AI Studio platform comes with Paddle and PaddleNLP preinstalled and updates them regularly. To update manually, run:

```
# After the first update, restart the kernel for it to take effect
!pip install --upgrade paddlenlp
```

Install the other libraries the project depends on. If you are told a file cannot be found, click refresh in the upper-left corner.

```
!pip install -r requirements.txt
```

First, import the third-party libraries the project needs:

```python
import abc
import sys
from functools import partial
import argparse
import os
import random
import time

import numpy as np

# Load the PaddlePaddle APIs
import paddle
import paddle.nn as nn
import paddle.nn.functional as F
import paddlenlp as ppnlp
from paddlenlp.data import Stack, Tuple, Pad
from paddlenlp.datasets import load_dataset, MapDataset
from paddlenlp.transformers import LinearDecayWithWarmup
from paddlenlp.utils.downloader import get_path_from_url
from paddle import inference
```

3. Data Preparation

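We start from a public COVID-19 government-affairs Q&A dataset and prepare three parts: a training set, an evaluation set, and a recall corpus. The government data contains articles, questions, and answers. We take the questions and build the evaluation set from them with Chinese-English back-translation and model-based paraphrase generation. The training set uses the questions from the government data directly. Training set examples (one question per line):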

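宁夏针对哪些人员开通工伤保障绿色通道? (For whom has Ningxia opened a green channel for work-injury insurance?)

四川金堂税务网上申报率为多少? (What is the online tax filing rate in Jintang, Sichuan?)

普陀如何实时跟踪返沪人员健康信息? (How does Putuo track the health information of people returning to Shanghai in real time?)

闽政通上的跨省异地就医业务可办理哪些业务子项? (Which cross-province medical treatment services can be handled on Minzhengtong?)

国家卫健委要求什么人员禁止乘坐交通工具? (Which people does the National Health Commission bar from public transport?)

中国科学院上海有机化学研究所与广西中医药大学联合哪些机构结合中医药特点研发抗新型冠状病毒的药剂? (With which institutions did the CAS Shanghai Institute of Organic Chemistry and Guangxi University of Chinese Medicine develop anti-coronavirus drugs based on traditional Chinese medicine?)

河北省的单位想要审批投资项目,可以在哪里办理呢? (Where can organizations in Hebei Province get investment projects approved?)

重庆市使用财政性资金采购进口疫情防控物资如何审批? (How does Chongqing approve purchases of imported epidemic-prevention supplies with fiscal funds?)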

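The evaluation set consists of question pairs, one tab-separated pair per line. In the examples below, the two sides are separated by " / ":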

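南昌市出台了哪些企业稳岗就业政策? / 南昌市政府出台了哪些企业的稳岗就业政策? (What job-stabilization policies for enterprises has Nanchang / the Nanchang government introduced?)

上海宣布什么时间开始开展中小学在线教育,学生不到校? / 中小学在线教育什么时候开始,上海开始开始的? (When did Shanghai announce that online teaching would start for primary and secondary schools, with students staying home? / When does online teaching for primary and secondary schools start in Shanghai?)

北京市对于中小微企业经贸交流平台展会项目的支持标准是怎样的? / 中小微企业经贸交流平台的发展目标 (What are Beijing's support standards for exhibitions on trade-exchange platforms for SMEs? / Development goals of trade-exchange platforms for SMEs)

在疫情防控期间怎样灵活安排工作时间? / 怎样在防控期间灵活安排工作时间? (How can working hours be arranged flexibly during epidemic prevention and control?)

湖北省为什么鼓励缴费人通过线上缴费渠道缴费? / 为什么要鼓励缴费人通过线上缴费渠道缴费 (Why does Hubei Province encourage payers to use online payment channels? / Why encourage payers to use online payment channels)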

```python
# Download and extract the dataset if it is not already present
if not os.path.exists('faq_data.zip'):
    get_path_from_url('https://paddlenlp.bj.bcebos.com/applications/faq_data.zip', root_dir='.')
```

3.1 Load the Data

```python
def read_simcse_text(data_path):
    """Reads data: each line becomes a (text_a, text_b) pair of identical sentences."""
    with open(data_path, 'r', encoding='utf-8') as f:
        for line in f:
            data = line.rstrip()
            yield {'text_a': data, 'text_b': data}


# Load the training set
train_set_file = 'faq_data/data/train.csv'
train_ds = load_dataset(
    read_simcse_text, data_path=train_set_file, lazy=False)

# Print three samples
for i in range(3):
    print(train_ds[i])
```

3.2 Build the DataLoader

```python
def convert_example(example, tokenizer, max_seq_length=512, do_evaluate=False):
    # Convert each text field into token IDs
    result = []
    for key, text in example.items():
        if 'label' in key:
            # do_evaluate
            result += [example['label']]
        else:
            # do_train
            encoded_inputs = tokenizer(text=text, max_seq_len=max_seq_length)
            input_ids = encoded_inputs["input_ids"]
            token_type_ids = encoded_inputs["token_type_ids"]
            result += [input_ids, token_type_ids]
    return result
```

```python
max_seq_length = 64
batch_size = 32
model_name_or_path = 'rocketqa-zh-dureader-query-encoder'
tokenizer = ppnlp.transformers.ErnieTokenizer.from_pretrained(model_name_or_path)

trans_func = partial(
    convert_example,
    tokenizer=tokenizer,
    max_seq_length=max_seq_length)

batchify_fn = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.pad_token_id),       # query_input
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # query_segment
    Pad(axis=0, pad_val=tokenizer.pad_token_id),       # title_input
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # title_segment
): [data for data in fn(samples)]
```
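To make the collation step concrete, the sketch below re-implements in plain Python roughly what `Tuple(Pad(...), ...)` does to a batch. Each sample is a list of fields, and each `Pad` right-pads one field to the longest sequence in the batch using that field's pad value. This is a simplified illustration, not PaddleNLP's implementation: the real `Pad` returns NumPy arrays and has more options. The helper names `pad_field` and `collate`, the toy IDs, and the pad value of 0 are assumptions made for the example.

```python
def pad_field(seqs, pad_val):
    """Right-pad every sequence to the length of the longest one."""
    max_len = max(len(s) for s in seqs)
    return [list(s) + [pad_val] * (max_len - len(s)) for s in seqs]


def collate(samples, pad_vals):
    """Apply one padding rule per field, similar to Tuple(Pad(...), Pad(...), ...)."""
    # samples: [[field_0, field_1, ...], ...]; zip(*samples) regroups them by field
    fields = list(zip(*samples))
    assert len(fields) == len(pad_vals)
    return [pad_field(f, v) for f, v in zip(fields, pad_vals)]


# Two toy samples in the training layout:
# [query_input_ids, query_token_type_ids, title_input_ids, title_token_type_ids]
samples = [
    [[1, 5, 6, 2], [0, 0, 0, 0], [1, 5, 6, 2], [0, 0, 0, 0]],
    [[1, 7, 2], [0, 0, 0], [1, 7, 2], [0, 0, 0]],
]
batch = collate(samples, pad_vals=[0, 0, 0, 0])
print(batch[0])  # [[1, 5, 6, 2], [1, 7, 2, 0]]
```

Padding to the longest sequence in each batch, rather than to `max_seq_length`, keeps short batches cheap.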

```python
# Plain text -> ID sequences for training
def create_dataloader(dataset,
                      mode='train',
                      batch_size=1,
                      batchify_fn=None,
                      trans_fn=None):
    if trans_fn:
        dataset = dataset.map(trans_fn)

    # Only shuffle during training
    shuffle = True if mode == 'train' else False
    if mode == 'train':
        batch_sampler = paddle.io.DistributedBatchSampler(
            dataset, batch_size=batch_size, shuffle=shuffle)
    else:
        batch_sampler = paddle.io.BatchSampler(
            dataset, batch_size=batch_size, shuffle=shuffle)

    return paddle.io.DataLoader(
        dataset=dataset,
        batch_sampler=batch_sampler,
        collate_fn=batchify_fn,
        return_list=True)
```
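The `mode` switch matters later in section 8. Only training shuffles, so in `predict` mode the embeddings come back in the same order as `corpus_list` and `text_list`, and the final batch may be smaller than `batch_size`.

The plain-Python sketch below imitates how a non-distributed batch sampler with `drop_last=False` splits sample indices into batches. `batch_indices` is a hypothetical helper. `DistributedBatchSampler` additionally splits batches across devices, which is not modeled here.

```python
import random


def batch_indices(num_samples, batch_size, shuffle, seed=None):
    """Split sample indices into batches; the last batch may be smaller (no drop_last)."""
    indices = list(range(num_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    return [indices[i:i + batch_size] for i in range(0, num_samples, batch_size)]


# Prediction order is preserved, so row r of batch b is sample b * batch_size + r
# for every batch except that the last one is shorter.
print(batch_indices(10, 4, shuffle=False))  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
```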

```python
train_data_loader = create_dataloader(
    train_ds,
    mode='train',
    batch_size=batch_size,
    batchify_fn=batchify_fn,
    trans_fn=trans_func)

for idx, batch in enumerate(train_data_loader):
    if idx == 0:
        print(batch)
        break
```

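4. Model Selection

In the government Q&A scenario, the only data available is question-answer pairs. A supervised method would need question-question pairs, which means collecting questions and labeling them by hand. We therefore use SimCSE, an unsupervised semantic indexing technique.

Unsupervised methods generally perform worse than supervised ones. To improve SimCSE, we start from RocketQA, a pre-trained language model for open-domain question answering, and further improve SimCSE with the WR (Word Repetition) strategy.

The whole solution needs no manual data labeling, so it is fully unsupervised.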

5. Model Construction

5.1 SimCSE Model

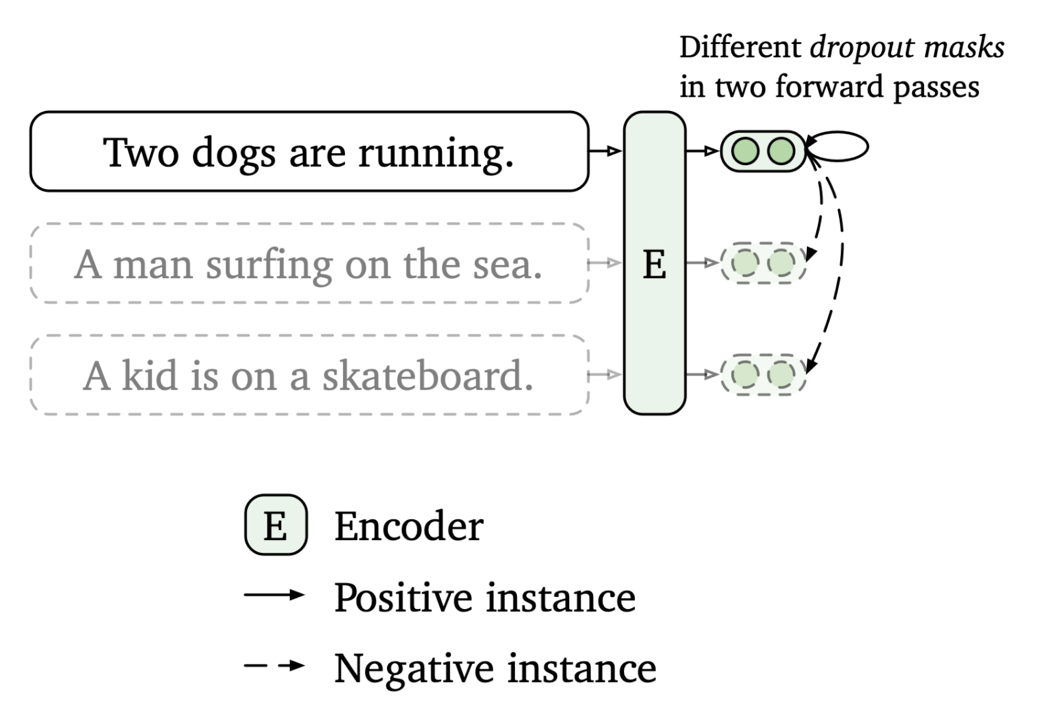

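The figure above illustrates how SimCSE works. SimCSE builds a positive pair from a single sentence by using dropout. The sentence is encoded twice, and because dropout is random, the two resulting vectors differ even though both represent the same sentence. The other sentences in the same batch serve as negatives.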
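Here is a minimal pure-Python sketch of the idea, under simplifying assumptions. A random toy vector stands in for the encoder output, and dropout is applied to it directly as inverted dropout with rate `p`. This resembles the `self.dropout` applied to the pooled embedding in the `SimCSE` class, although in the real model dropout also acts inside the encoder. Passing the same vector through two independent dropout masks yields two different but highly similar views, which form the positive pair. An unrelated vector plays the role of an in-batch negative. The function names are illustrative only.

```python
import math
import random


def dropout(vec, p, rng):
    """Inverted dropout: zero each element with probability p, scale survivors by 1/(1-p)."""
    return [0.0 if rng.random() < p else x / (1.0 - p) for x in vec]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


rng = random.Random(0)
sentence_vec = [rng.gauss(0.0, 1.0) for _ in range(768)]  # stand-in for an encoder output
view_1 = dropout(sentence_vec, p=0.1, rng=rng)            # first forward pass
view_2 = dropout(sentence_vec, p=0.1, rng=rng)            # second forward pass
other_vec = [rng.gauss(0.0, 1.0) for _ in range(768)]     # another sentence in the batch

print(round(cosine(view_1, view_2), 2))     # high: same sentence, different masks
print(round(cosine(view_1, other_vec), 2))  # near 0: in-batch negative
```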

The SimCSE network is defined as follows:

```python
class SimCSE(nn.Layer):
    def __init__(self,
                 pretrained_model,
                 dropout=None,
                 margin=0.0,
                 scale=20,
                 output_emb_size=None):
        super().__init__()
        self.ptm = pretrained_model
        self.dropout = nn.Dropout(dropout if dropout is not None else 0.1)

        # If output_emb_size is greater than 0, add a Linear layer to reduce the
        # embedding size. We recommend output_emb_size = 256 as a trade-off
        # between recall performance and efficiency.
        self.output_emb_size = output_emb_size if output_emb_size is not None else 0
        if self.output_emb_size > 0:
            weight_attr = paddle.ParamAttr(
                initializer=paddle.nn.initializer.TruncatedNormal(std=0.02))
            # 768 must match the hidden size of the pretrained encoder
            self.emb_reduce_linear = paddle.nn.Linear(
                768, self.output_emb_size, weight_attr=weight_attr)

        self.margin = margin
        # Scale the cosine similarity to ease convergence
        self.scale = scale

    @paddle.jit.to_static(input_spec=[
        paddle.static.InputSpec(shape=[None, None], dtype='int64'),
        paddle.static.InputSpec(shape=[None, None], dtype='int64')
    ])
    def get_pooled_embedding(self,
                             input_ids,
                             token_type_ids=None,
                             position_ids=None,
                             attention_mask=None,
                             with_pooler=True):
        # Note: cls_embedding is the pooled embedding (tanh activation)
        sequence_output, cls_embedding = self.ptm(input_ids, token_type_ids,
                                                  position_ids, attention_mask)
        if not with_pooler:
            cls_embedding = sequence_output[:, 0, :]

        if self.output_emb_size > 0:
            cls_embedding = self.emb_reduce_linear(cls_embedding)
        cls_embedding = self.dropout(cls_embedding)
        cls_embedding = F.normalize(cls_embedding, p=2, axis=-1)
        return cls_embedding

    def get_semantic_embedding(self, data_loader):
        self.eval()
        with paddle.no_grad():
            for batch_data in data_loader:
                input_ids, token_type_ids = batch_data
                input_ids = paddle.to_tensor(input_ids)
                token_type_ids = paddle.to_tensor(token_type_ids)

                text_embeddings = self.get_pooled_embedding(
                    input_ids, token_type_ids=token_type_ids)
                yield text_embeddings

    def cosine_sim(self,
                   query_input_ids,
                   title_input_ids,
                   query_token_type_ids=None,
                   query_position_ids=None,
                   query_attention_mask=None,
                   title_token_type_ids=None,
                   title_position_ids=None,
                   title_attention_mask=None,
                   with_pooler=True):
        query_cls_embedding = self.get_pooled_embedding(
            query_input_ids, query_token_type_ids, query_position_ids,
            query_attention_mask, with_pooler=with_pooler)
        title_cls_embedding = self.get_pooled_embedding(
            title_input_ids, title_token_type_ids, title_position_ids,
            title_attention_mask, with_pooler=with_pooler)

        cosine_sim = paddle.sum(query_cls_embedding * title_cls_embedding, axis=-1)
        return cosine_sim

    def forward(self,
                query_input_ids,
                title_input_ids,
                query_token_type_ids=None,
                query_position_ids=None,
                query_attention_mask=None,
                title_token_type_ids=None,
                title_position_ids=None,
                title_attention_mask=None):
        query_cls_embedding = self.get_pooled_embedding(
            query_input_ids, query_token_type_ids, query_position_ids,
            query_attention_mask)
        title_cls_embedding = self.get_pooled_embedding(
            title_input_ids, title_token_type_ids, title_position_ids,
            title_attention_mask)

        # [batch_size, batch_size] matrix of cosine similarities
        cosine_sim = paddle.matmul(
            query_cls_embedding, title_cls_embedding, transpose_y=True)

        # Subtract the margin from the positive pairs (the diagonal of cosine_sim)
        margin_diag = paddle.full(
            shape=[query_cls_embedding.shape[0]],
            fill_value=self.margin,
            dtype=paddle.get_default_dtype())
        cosine_sim = cosine_sim - paddle.diag(margin_diag)

        # Scale the cosine similarity to ease training convergence
        cosine_sim *= self.scale

        # The positive for query i is title i
        labels = paddle.arange(0, query_cls_embedding.shape[0], dtype='int64')
        labels = paddle.reshape(labels, shape=[-1, 1])

        loss = F.cross_entropy(input=cosine_sim, label=labels)
        return loss
```
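The `forward` method above reduces to a few arithmetic steps:

1. Build the batch's cosine-similarity matrix.
2. Subtract `margin` from the diagonal, which holds the positive pairs.
3. Multiply by `scale`.
4. Apply softmax cross-entropy, where the correct class for row `i` is column `i`.

The pure-Python sketch below mirrors those steps on toy embeddings that are already L2-normalized, so the effect of `margin` and `scale` can be checked by hand. `simcse_loss` is a hypothetical helper, not part of PaddleNLP.

```python
import math


def simcse_loss(queries, titles, margin=0.1, scale=20.0):
    """In-batch contrastive loss mirroring SimCSE.forward (inputs already L2-normalized)."""
    n = len(queries)
    total = 0.0
    for i in range(n):
        # Row i of the similarity matrix: query i against every title in the batch
        logits = []
        for j in range(n):
            sim = sum(q * t for q, t in zip(queries[i], titles[j]))
            if i == j:
                sim -= margin  # make the positive pair harder, like paddle.diag(margin_diag)
            logits.append(sim * scale)
        # Cross-entropy with label i: -log(softmax(logits)[i]), computed stably
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(sum(math.exp(l - max_logit) for l in logits))
        total += log_sum_exp - logits[i]
    return total / n  # averaged over the batch


# Two orthogonal unit vectors: each query matches its own title perfectly.
q = [[1.0, 0.0], [0.0, 1.0]]
print(simcse_loss(q, q, margin=0.0))                                  # close to 0
print(simcse_loss(q, q, margin=0.1) > simcse_loss(q, q, margin=0.0))  # True
```

A positive `margin` raises the loss even for perfectly aligned pairs, so the model must separate positives from negatives by a wider gap. `scale` sharpens the softmax over the cosine values, which are bounded in [-1, 1].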

5.2 WR (Word Repetition) Strategy

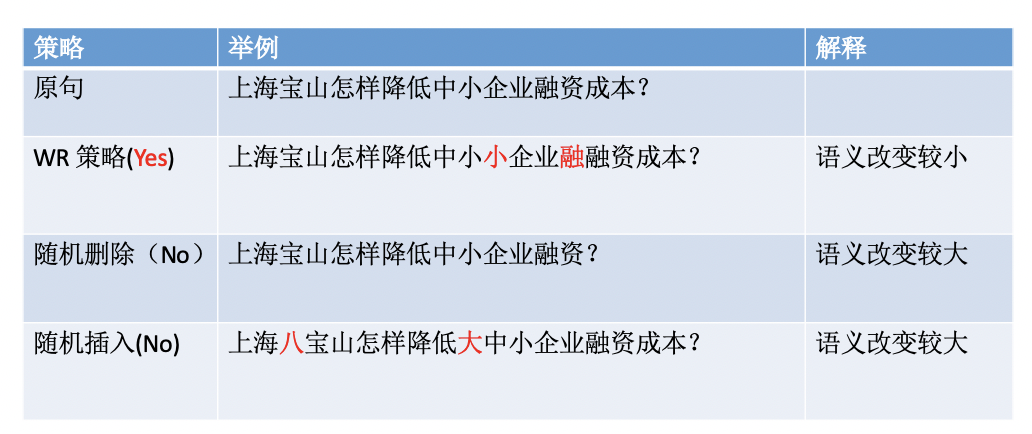

The figure above briefly compares WR with other strategies. WR barely changes the meaning of the original sentence but does change its length. This breaks SimCSE's built-in assumption that the two sentences in a positive pair always have the same length. The WR strategy comes from the ESimCSE paper; see the paper for details on how it works.

```python
def word_repetition(input_ids, token_type_ids, dup_rate=0.32):
    """Word Repetition strategy."""
    input_ids = input_ids.numpy().tolist()
    token_type_ids = token_type_ids.numpy().tolist()

    batch_size, seq_len = len(input_ids), len(input_ids[0])
    repeated_input_ids = []
    repeated_token_type_ids = []
    rep_seq_len = seq_len
    for batch_id in range(batch_size):
        cur_input_id = input_ids[batch_id]
        # The padding ID is 0, so non-zero IDs are the real tokens
        actual_len = np.count_nonzero(cur_input_id)
        dup_word_index = []
        # Leave sequences of 5 tokens or fewer unchanged
        if actual_len > 5:
            dup_len = random.randint(a=0, b=max(2, int(dup_rate * actual_len)))
            # Skip the [CLS] and [SEP] positions
            dup_word_index = random.sample(
                list(range(1, actual_len - 1)), k=dup_len)

        r_input_id = []
        r_token_type_id = []
        for idx, word_id in enumerate(cur_input_id):
            # Insert a duplicate of each selected token
            if idx in dup_word_index:
                r_input_id.append(word_id)
                r_token_type_id.append(token_type_ids[batch_id][idx])
            r_input_id.append(word_id)
            r_token_type_id.append(token_type_ids[batch_id][idx])
        after_dup_len = len(r_input_id)
        repeated_input_ids.append(r_input_id)
        repeated_token_type_ids.append(r_token_type_id)

        if after_dup_len > rep_seq_len:
            rep_seq_len = after_dup_len

    # Pad every sequence in the batch to the same length
    for batch_id in range(batch_size):
        after_dup_len = len(repeated_input_ids[batch_id])
        pad_len = rep_seq_len - after_dup_len
        repeated_input_ids[batch_id] += [0] * pad_len
        repeated_token_type_ids[batch_id] += [0] * pad_len

    return paddle.to_tensor(repeated_input_ids), paddle.to_tensor(
        repeated_token_type_ids)
```
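To see what WR does to a single sentence, here is a simplified, dependency-free sketch of the same idea applied to one unpadded token list. It picks up to `dup_rate * len` interior positions, never `[CLS]` or `[SEP]`, and repeats the token at each one. It follows the sampling rule of `word_repetition` above but skips batching and padding. `repeat_words` and the toy token IDs are illustrative assumptions.

```python
import random


def repeat_words(tokens, dup_rate=0.32, rng=None):
    """Duplicate randomly chosen interior tokens of a single sequence (WR strategy)."""
    rng = rng or random.Random()
    n = len(tokens)
    if n <= 5:  # same rule as word_repetition: short sequences are left unchanged
        return list(tokens)
    dup_len = rng.randint(0, max(2, int(dup_rate * n)))
    # Positions 0 and n-1 hold [CLS] and [SEP], so sample only from 1..n-2
    dup_positions = set(rng.sample(range(1, n - 1), k=dup_len))
    out = []
    for idx, tok in enumerate(tokens):
        if idx in dup_positions:
            out.append(tok)  # the extra copy
        out.append(tok)
    return out


tokens = [1, 101, 102, 103, 104, 105, 106, 2]  # toy IDs: [CLS] ... [SEP]
augmented = repeat_words(tokens, dup_rate=0.32, rng=random.Random(42))
print(augmented)
```

Collapsing adjacent duplicates recovers the original sentence, which shows that the meaning is essentially unchanged while the length varies between the two views.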

6. Training Configuration

```python
# Key hyperparameters
scale = 20      # recommended: 10 ~ 30
margin = 0.1    # recommended: 0.0 ~ 0.2
max_seq_length = 64
epochs = 1
learning_rate = 5E-5
warmup_proportion = 0.0
weight_decay = 0.0
save_steps = 10
# Note: train_data_loader was already built with batch_size=32 in section 3.2;
# this value applies to the corpus and query loaders created in section 8.
batch_size = 64
output_emb_size = 256
dup_rate = 0.3
save_dir = 'checkpoints'

pretrained_model = ppnlp.transformers.ErnieModel.from_pretrained(model_name_or_path)
model = SimCSE(
    pretrained_model,
    margin=margin,
    scale=scale,
    output_emb_size=output_emb_size)

num_training_steps = len(train_data_loader) * epochs
lr_scheduler = LinearDecayWithWarmup(learning_rate, num_training_steps,
                                     warmup_proportion)

# Generate the parameter names needed to perform weight decay.
# All bias and LayerNorm parameters are excluded.
decay_params = [
    p.name for n, p in model.named_parameters()
    if not any(nd in n for nd in ["bias", "norm"])
]
optimizer = paddle.optimizer.AdamW(
    learning_rate=lr_scheduler,
    parameters=model.parameters(),
    weight_decay=weight_decay,
    apply_decay_param_fun=lambda x: x in decay_params)
```
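For reference, PaddleNLP describes `LinearDecayWithWarmup` as a linear warmup to the base learning rate followed by a linear decay to zero. The sketch below restates that behaviour as a small function. Some details are our own assumption rather than the library source, for example how a fractional `warmup` is converted to a number of steps. The sketch also replays the `decay_params` filter on a few made-up parameter names.

With `warmup_proportion=0.0`, training starts directly at `learning_rate` and decays linearly. With `weight_decay=0.0`, the filter has no practical effect in this configuration.

```python
import math


def linear_decay_with_warmup(base_lr, total_steps, warmup, step):
    """Learning rate at `step`: linear warmup, then linear decay to 0.

    `warmup` is either a number of steps (int) or a proportion of total_steps (float).
    """
    warmup_steps = warmup if isinstance(warmup, int) else int(math.floor(warmup * total_steps))
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


def uses_weight_decay(param_name):
    """Mirror of the decay_params filter: exclude bias and LayerNorm ("norm") parameters."""
    return not any(nd in param_name for nd in ["bias", "norm"])


print([linear_decay_with_warmup(5e-5, 100, 0.1, s) for s in (0, 5, 10, 55, 100)])
# Hypothetical parameter names, for illustration only
names = ["encoder.layers.0.linear1.weight",
         "encoder.layers.0.linear1.bias",
         "encoder.layers.0.norm1.weight"]
print([n for n in names if uses_weight_decay(n)])
```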

7. Model Training

```python
def do_train(model, train_data_loader, **kwargs):
    save_dir = kwargs['save_dir']
    global_step = 0
    tic_train = time.time()
    for epoch in range(1, epochs + 1):
        for step, batch in enumerate(train_data_loader, start=1):
            query_input_ids, query_token_type_ids, title_input_ids, title_token_type_ids = batch
            # Apply the WR strategy to both views of each sentence
            if dup_rate > 0.0:
                query_input_ids, query_token_type_ids = word_repetition(
                    query_input_ids, query_token_type_ids, dup_rate)
                title_input_ids, title_token_type_ids = word_repetition(
                    title_input_ids, title_token_type_ids, dup_rate)

            loss = model(
                query_input_ids=query_input_ids,
                title_input_ids=title_input_ids,
                query_token_type_ids=query_token_type_ids,
                title_token_type_ids=title_token_type_ids)

            global_step += 1
            # Log every 5 steps; speed is averaged over those 5 steps
            if global_step % 5 == 0:
                print("global step %d, epoch: %d, batch: %d, loss: %.5f, speed: %.2f step/s"
                      % (global_step, epoch, step, float(loss),
                         5 / (time.time() - tic_train)))
                tic_train = time.time()

            loss.backward()
            optimizer.step()
            lr_scheduler.step()
            optimizer.clear_grad()

            if global_step % save_steps == 0:
                save_path = os.path.join(save_dir, "model_%d" % global_step)
                os.makedirs(save_path, exist_ok=True)
                save_param_path = os.path.join(save_path, 'model_state.pdparams')
                paddle.save(model.state_dict(), save_param_path)
                tokenizer.save_pretrained(save_path)

    # Save the model after the last batch
    save_path = os.path.join(save_dir, "model_%d" % global_step)
    os.makedirs(save_path, exist_ok=True)
    save_param_path = os.path.join(save_path, 'model_state.pdparams')
    paddle.save(model.state_dict(), save_param_path)
    tokenizer.save_pretrained(save_path)


# Train the model
do_train(model, train_data_loader, save_dir=save_dir)
```

8. Evaluation

```python
def gen_id2corpus(corpus_file):
    id2corpus = {}
    with open(corpus_file, 'r', encoding='utf-8') as f:
        for idx, line in enumerate(f):
            id2corpus[idx] = line.rstrip()
    return id2corpus


corpus_file = 'faq_data/data/corpus.csv'
id2corpus = gen_id2corpus(corpus_file)

# The input to convert_example_test must be a dict
corpus_list = [{idx: text} for idx, text in id2corpus.items()]
print(corpus_list[:4])


def convert_example_test(example,
                         tokenizer,
                         max_seq_length=512,
                         pad_to_max_seq_len=False):
    result = []
    for key, text in example.items():
        encoded_inputs = tokenizer(
            text=text,
            max_seq_len=max_seq_length,
            pad_to_max_seq_len=pad_to_max_seq_len)
        input_ids = encoded_inputs["input_ids"]
        token_type_ids = encoded_inputs["token_type_ids"]
        result += [input_ids, token_type_ids]
    return result


trans_func_corpus = partial(
    convert_example_test,
    tokenizer=tokenizer,
    max_seq_length=max_seq_length)

batchify_fn_corpus = lambda samples, fn=Tuple(
    Pad(axis=0, pad_val=tokenizer.pad_token_id),       # text_input
    Pad(axis=0, pad_val=tokenizer.pad_token_type_id),  # text_segment
): [data for data in fn(samples)]
```

```python
corpus_ds = MapDataset(corpus_list)
corpus_data_loader = create_dataloader(
    corpus_ds,
    mode='predict',
    batch_size=batch_size,
    batchify_fn=batchify_fn_corpus,
    trans_fn=trans_func_corpus)

for item in corpus_data_loader:
    print(item)
    break
```

```python
# ann_util is a local helper module from the project directory
from ann_util import build_index

# HNSW index parameters
hnsw_max_elements = 1000000
hnsw_ef = 100
hnsw_m = 100

final_index = build_index(corpus_data_loader, model,
                          output_emb_size=output_emb_size,
                          hnsw_max_elements=hnsw_max_elements,
                          hnsw_ef=hnsw_ef,
                          hnsw_m=hnsw_m)
```

```python
def gen_text_file(similar_text_pair_file):
    text2similar_text = {}
    texts = []
    with open(similar_text_pair_file, 'r', encoding='utf-8') as f:
        for line in f:
            splited_line = line.rstrip().split("\t")
            if len(splited_line) != 2:
                continue
            text, similar_text = splited_line
            if not text or not similar_text:
                continue
            text2similar_text[text] = similar_text
            texts.append({"text": text})
    return texts, text2similar_text


similar_text_pair_file = 'faq_data/data/test_pair.csv'
text_list, text2similar_text = gen_text_file(similar_text_pair_file)
```

```python
# print(text_list[:2])
# print(text2similar_text)

query_ds = MapDataset(text_list)
query_data_loader = create_dataloader(
    query_ds,
    mode='predict',
    batch_size=batch_size,
    batchify_fn=batchify_fn_corpus,
    trans_fn=trans_func_corpus)

query_embedding = model.get_semantic_embedding(query_data_loader)

recall_result_dir = 'recall_result_dir'
os.makedirs(recall_result_dir, exist_ok=True)

recall_num = 10
recall_result_file = os.path.join(recall_result_dir, 'recall_result.txt')
with open(recall_result_file, 'w', encoding='utf-8') as f:
    # Running offset into text_list; the last batch may be smaller than batch_size
    text_offset = 0
    for batch_query_embedding in query_embedding:
        # knn_query returns distances (1 - similarity for an inner-product or cosine index)
        recalled_idx, distances = final_index.knn_query(
            batch_query_embedding.numpy(), recall_num)

        for row_index in range(len(distances)):
            text = text_list[text_offset + row_index]["text"]
            for idx, doc_idx in enumerate(recalled_idx[row_index]):
                f.write("{}\t{}\t{}\n".format(
                    text, id2corpus[doc_idx], 1.0 - distances[row_index][idx]))
        text_offset += len(distances)
```

```python
# evaluate and data are local helper modules from the project directory
from evaluate import recall
from data import get_rs

recall_N = []
similar_text_pair = "faq_data/data/test_pair.csv"
rs = get_rs(similar_text_pair, recall_result_file, 10)
recall_num = [1, 5, 10]
for topN in recall_num:
    R = round(100 * recall(rs, N=topN), 3)
    recall_N.append(str(R))
for key, val in zip(recall_num, recall_N):
    print('recall@{}={}'.format(key, val))
```
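The `recall` and `get_rs` helpers come from the project's own modules and are not reproduced here. As an assumption about what they compute, the sketch below shows the usual definition of recall@N for this setup, where each test question has exactly one gold paraphrase. First build a 0/1 hit list over the recalled texts for each question. Then report the fraction of questions whose gold text appears among the first N results. `hit_lists` and `recall_at_n` are hypothetical names, and the data is made up.

```python
def hit_lists(recalled, gold):
    """recalled: {query: [retrieved texts, best first]}; gold: {query: gold paraphrase}.

    Returns one 0/1 list per query marking which retrieved texts equal the gold text.
    """
    return [[1 if text == gold[q] else 0 for text in recalled[q]] for q in gold]


def recall_at_n(rs, n):
    """Fraction of queries with at least one hit among their first n results."""
    return sum(1 for r in rs if any(r[:n])) / len(rs)


recalled = {
    "q1": ["a", "b", "c"],   # gold "a" at rank 1
    "q2": ["x", "y", "d"],   # gold "d" at rank 3
    "q3": ["m", "n", "o"],   # gold "e" not retrieved
}
gold = {"q1": "a", "q2": "d", "q3": "e"}
rs = hit_lists(recalled, gold)
for n in (1, 3):
    print("recall@{}={}".format(n, round(100 * recall_at_n(rs, n), 3)))
```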

9. Model Inference

```python
# "What job-stabilization policies for enterprises has Nanchang introduced?"
example = "南昌市出台了哪些企业稳岗就业政策?"
print('Input text: {}'.format(example))

encoded_inputs = tokenizer(
    text=[example],
    max_seq_len=max_seq_length)
input_ids = paddle.to_tensor(encoded_inputs["input_ids"])
token_type_ids = paddle.to_tensor(encoded_inputs["token_type_ids"])

# Make sure dropout is disabled before extracting the embedding
model.eval()
cls_embedding = model.get_pooled_embedding(
    input_ids=input_ids, token_type_ids=token_type_ids)
# print('Extracted features: {}'.format(cls_embedding))

recalled_idx, distances = final_index.knn_query(cls_embedding.numpy(), 10)

print('Retrieved results:')
# knn_query returns distances, so 1 - distance is the similarity
for doc_idx, distance in zip(recalled_idx[0], distances[0]):
    print(id2corpus[doc_idx], 1.0 - distance)
```
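`final_index.knn_query` performs an approximate (HNSW) nearest-neighbour search. For a small corpus, its output can be sanity-checked against an exact search. Because `get_pooled_embedding` L2-normalizes the embeddings, the inner product equals the cosine similarity, and ranking by it gives the exact top-k.

The sketch below uses hypothetical names and toy 2-D vectors. It returns `1 - similarity` as the distance, which assumes `build_index` uses an inner-product index whose distance follows that convention.

```python
import heapq
import math


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def exact_knn(query, corpus, k):
    """Exact top-k by inner product over L2-normalized vectors.

    Returns (indices, distances) with distance = 1 - cosine similarity, best first.
    """
    q = normalize(query)
    sims = [sum(a * b for a, b in zip(q, normalize(doc))) for doc in corpus]
    top = heapq.nlargest(k, range(len(corpus)), key=lambda i: sims[i])
    return top, [1.0 - sims[i] for i in top]


corpus = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
indices, distances = exact_knn([1.0, 0.2], corpus, k=2)
print(indices)  # [0, 2]
```

Comparing this exact result with the HNSW result on a sample of queries is a cheap way to check whether `hnsw_ef` and `hnsw_m` are large enough.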

10. Deployment

10.1 Export to a Static Graph

```python
output_path = 'output'
model.eval()

# Convert to a static graph with a specific input description
model = paddle.jit.to_static(
    model,
    input_spec=[
        paddle.static.InputSpec(shape=[None, None], dtype="int64"),  # input_ids
        paddle.static.InputSpec(shape=[None, None], dtype="int64")   # segment_ids
    ])

# Save the static graph model
save_path = os.path.join(output_path, "inference")
paddle.jit.save(model, save_path)
```

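10.2 Q&A Retrieval Engine

With the model ready, the next step is to build a semantic retrieval engine on Milvus for fast retrieval of semantic vectors. This project uses the open-source Milvus for vector search; for setup instructions, see the official Milvus installation guide. This example uses the CPU version of Milvus 1.1.1. The official Docker installation is recommended because it is quick and simple.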

10.3 Paddle Serving Deployment

```python
import paddle_serving_client.io as serving_io

dirname = "output"
model_filename = "inference.get_pooled_embedding.pdmodel"
params_filename = "inference.get_pooled_embedding.pdiparams"
server_path = "serving_server"
client_path = "serving_client"
feed_alias_names = None
fetch_alias_names = "output_embedding"
show_proto = False

serving_io.inference_model_to_serving(
    dirname=dirname,
    serving_server=server_path,
    serving_client=client_path,
    model_filename=model_filename,
    params_filename=params_filename,
    show_proto=show_proto,
    feed_alias_names=feed_alias_names,
    fetch_alias_names=fetch_alias_names)
```

After the conversion, start the server to deploy the service and send requests to it from the client. For details, see the code: https://github.com/PaddlePaddle/PaddleNLP/tree/develop/applications/question_answering/faq_system
